Every resource in AWS has an Amazon Resource Name (ARN). An ARN uniquely identifies a resource across all of AWS, so no two resources, whether in your account or in someone else's, share the same ARN.

ARNs are used most often in IAM policies, where they specify which resources the policy grants access to.
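To make that concrete, here is a minimal sketch of an IAM policy document built as a Python dictionary. The bucket name `example-bucket` is hypothetical, and the two actions are just a common read-only pairing chosen for illustration, not a recommendation for your setup.

```python
import json

# Hypothetical bucket ARN, used only for illustration.
BUCKET_ARN = "arn:aws:s3:::example-bucket"

# A read-only policy sketch: listing targets the bucket itself,
# while reading objects targets the keys inside it (hence the "/*").
policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["s3:ListBucket"],
            "Resource": BUCKET_ARN,
        },
        {
            "Effect": "Allow",
            "Action": ["s3:GetObject"],
            "Resource": f"{BUCKET_ARN}/*",
        },
    ],
}

# Print the JSON you would paste into the IAM policy editor.
print(json.dumps(policy, indent=2))
```

Note that the bucket-level action uses the bucket ARN as-is, while the object-level action appends `/*` to it.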

You can predict the ARN of an S3 bucket because it follows a standard format: `arn:aws:s3:::S3_BUCKET_NAME`.

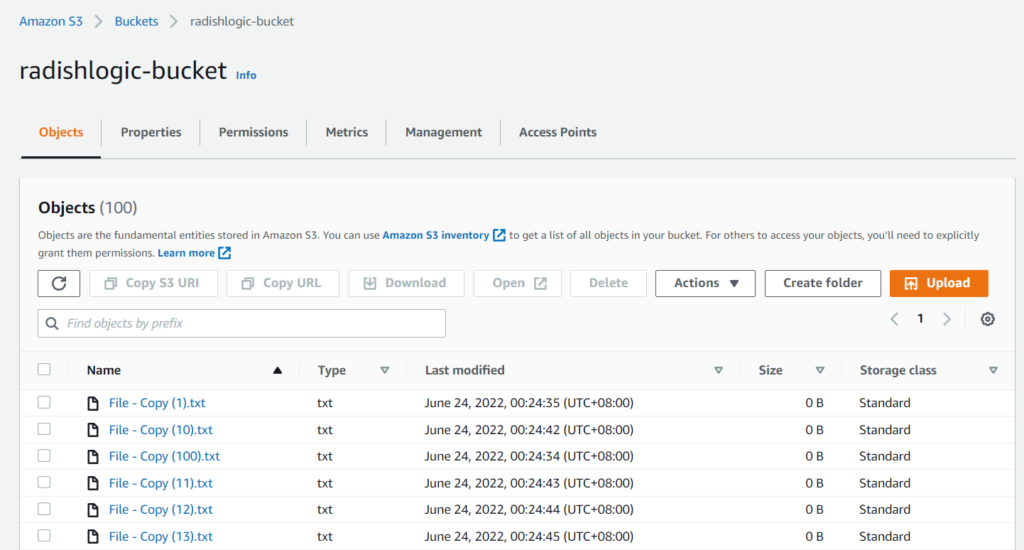
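Since the format is fixed, building the ARN from a bucket name is a one-liner. The sketch below assumes a hypothetical helper named `s3_bucket_arn`; the `partition` parameter is an addition for accounts outside the standard `aws` partition (for example `aws-cn` or `aws-us-gov`).

```python
def s3_bucket_arn(bucket_name: str, partition: str = "aws") -> str:
    """Build the ARN of an S3 bucket from its name.

    The region and account ID fields of the ARN are left empty for
    S3 buckets, which is why three colons appear in a row.
    """
    if not bucket_name:
        raise ValueError("bucket_name must not be empty")
    return f"arn:{partition}:s3:::{bucket_name}"


# "example-bucket" is a hypothetical bucket name.
print(s3_bucket_arn("example-bucket"))
```

This only formats a string. It does not check that the bucket exists or that you have access to it.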

But if you are like me and afraid of mistyping the S3 bucket ARN, you can instead go to the AWS Console, find the bucket's ARN, and copy and paste it.

Follow the instructions below to get the S3 Bucket ARN.
